On August 27, 2006, just after 0600 Eastern time, Comair Flight 5191, a Bombardier CL-600 regional jet, began taxiing for its scheduled departure from Blue Grass Airport (KLEX) in Lexington, Kentucky, to Atlanta, Georgia. Night visual conditions prevailed. In the control tower, the lone controller, busy with daily administrative duties, cleared the flight to depart from Runway 22. After a brief taxi, the captain and the first officer believed they were on the designated Runway 22 and began their takeoff roll. Instead, they had taxied onto Runway 26.

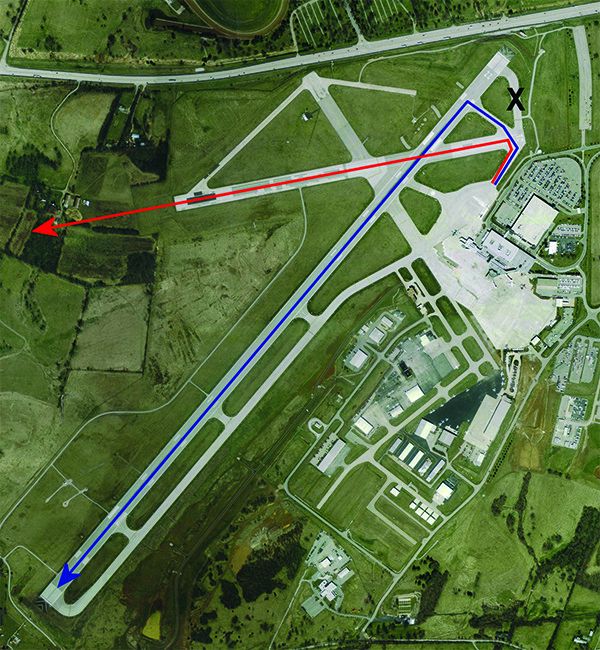

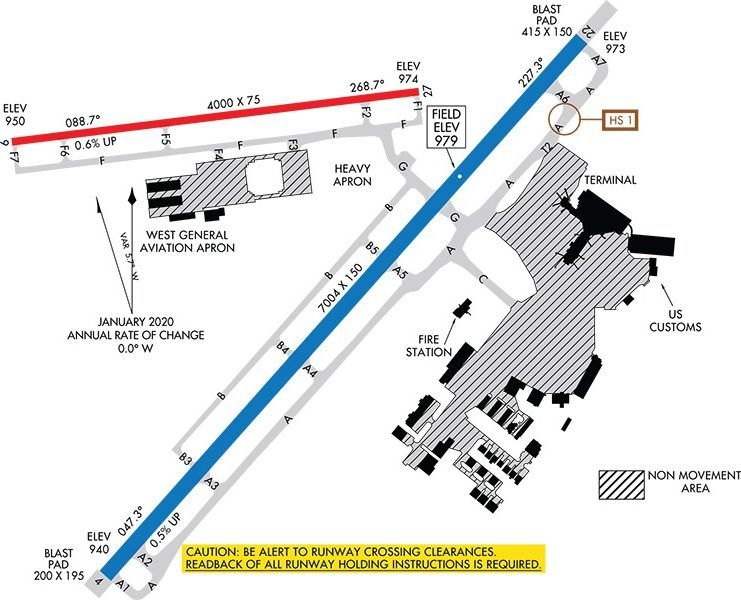

The pilots apparently failed to recognize that a section of Taxiway A between the approach end of Runway 26 and Runway 22 had been closed for maintenance. This was despite a local NOTAM announcing the closure and low-profile barricades with flashing red lights blocking that part of the taxiway. To reach Runway 22, the crew would have had to use an alternate route. When the crew reached the barricades, however, they turned left onto Runway 26 and began the takeoff roll. The NTSB’s 174-page report notes that while the accident aircraft required at least 3744 feet of runway to reach rotation speed, Runway 26 provided only 3501 feet, a shortfall of 243 feet.

Predictably, the regional jet crashed after running out of runway, destroying the airplane and killing the captain, the flight attendant and 47 passengers. Only the first officer survived, suffering serious injuries that ended his airline career.

AFTERMATH

The NTSB determined the probable cause of this accident was “the flight crewmembers’ failure to use available cues and aids to identify the airplane’s location on the airport surface during taxi and their failure to cross check and verify that the airplane was on the correct runway before takeoff.” Contributing factors were the flight crew’s nonpertinent conversation while taxiing, which resulted in loss of positional awareness, and the FAA’s failure to require specific ATC clearances to cross runways. The NTSB’s report on the accident included five new recommendations, added to the previous five it had issued in response to this accident, plus reiteration of two earlier ones. The sidebar on page 14 summarizes the recommendations for pilots.

The flight crew failed to use a variety of tools available to them to confirm that they had lined up on the correct runway, perhaps most obviously the surface’s number, painted on the pavement. They also didn’t use directional indicators in the cockpit to verify that the airplane’s heading matched the desired runway’s. The runway lights, which are normally turned on for a pre-dawn takeoff, were not on. The crew even noted their absence, with the first officer stating about 10 seconds after takeoff power was applied “[that] is weird with no lights,” to which the captain responded, “Yeah,” two seconds later. The absence of illumination might have made it difficult for the flight crew to see runway signage and markings, further contributing to their failure to detect their fatal mistakes.
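The heading cross-check the crew skipped is simple enough to sketch in a few lines. The example below is only an illustration, not a description of any certified avionics function: it relies on the convention that a runway's number is its magnetic heading rounded to the nearest 10 degrees and divided by 10, and the function names and the 10-degree tolerance are assumptions made for the example.

```python
def runway_heading(runway: str) -> int:
    """Approximate magnetic heading implied by a runway number (e.g. "22" -> 220)."""
    # Keep only the digits so designators such as "22L" also work.
    number = int("".join(ch for ch in runway if ch.isdigit()))
    return (number * 10) % 360  # Runway 36 maps to 0 (north)


def heading_error(indicated: float, runway: str) -> float:
    """Smallest angular difference, in degrees, between heading and runway."""
    diff = abs(indicated - runway_heading(runway)) % 360
    return min(diff, 360 - diff)


def on_expected_runway(indicated: float, runway: str,
                       tolerance: float = 10.0) -> bool:
    """True if the indicated heading agrees with the cleared runway.

    The 10-degree tolerance is an assumed value chosen for illustration.
    """
    return heading_error(indicated, runway) <= tolerance
```

An airplane lined up on a runway numbered 26 shows a heading near 260 degrees, roughly 40 degrees away from what a runway numbered 22 implies, so `on_expected_runway(260, "22")` comes back false. A disagreement that large is hard to explain away once someone actually looks for it.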

THE PSYCHOLOGY OF EXPERIENCE

Both pilots were familiar with the airport. The captain had conducted six flight operations at KLEX in the past two years, half of them at night. His most recent flight at the airport was two months before the accident. The first officer conducted 12 flight operations at the airport during the prior three years. Ironically, their airport-specific experience may actually have been one of the root causes of the accident: It’s plausible their previous experience at Lexington, coupled with their overall experience, led the flight crew to operate by routine. Unfortunately, the partial taxiway closure, which required a different taxi route, was a new piece of information critical to the safety of the flight, but it was not integrated into the flight crew’s decision-making. Why not?

The answer might lie in complacency. Research supports the suggestion that habits born out of repeated experience can have damaging effects on safety. Page-Bottorff (2016) notes that “habits make up approximately 40% of human behavior.” He goes on to say, “Many daily actions are driven by routine because it helps conserve mental reserves for concerns that require active consideration. But this also can cause people to perform actions on autopilot when they should be paying attention.”

Additional research shows prior knowledge about a task can inhibit a person’s ability to integrate new knowledge. Wood and Lynch (2002) describe a number of disadvantages of prior knowledge. One is overconfidence; they point to Alba and Hutchinson (2000), who asserted, “We expect that overconfidence inhibits information search motivated by a goal of reducing knowledge uncertainty.” Buller (2000) describes how overconfidence affected his ability to safely execute the maneuvers needed to fly his military aircraft. Problems included missed radio calls and altitude assignments, as well as the inability to remember flight procedures. Wilson (2010) and Mehta (2017) both went so far as to label complacency a “silent killer.” Focusing on the harm caused by complacency in occupational safety, Wilson recommends teaching people to be more aware of the problems caused by complacency.

This points to a weakness in the FAA’s NOTAM system, as it requires pilots to actively seek out new information and then decide how to integrate it into their decision-making process. The flight crew’s prior experience at Blue Grass Airport combined with the absence of obvious incongruent information may have led to complacency that allowed them to operate by rote instead of actively seeking out potentially important new information.

Ten formal recommendations flowed from the NTSB to the FAA in response to the Comair Flight 5191 accident. While they mostly focus on fractional or commercial operations, many of them are good advice for Part 91 operators. Here’s a summary:

* Require all flight crewmembers to positively confirm and cross-check the airplane’s location before crossing the hold short line for takeoff.

* Require moving-map displays or an automatic system that alerts when takeoff is attempted from a taxiway or unintended runway.

* Require commercial airports to implement enhanced taxiway centerline markings and surface-painted holding position signs at all runway entrances.

* Prohibit a takeoff clearance until after the airplane has crossed all intersecting runways.

* Revise controller guidance so that controllers forgo administrative tasks while responsible for moving aircraft.

* Provide specific guidance to pilots on the runway lighting requirements for takeoff operations at night.
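To make the idea of an automatic takeoff alert concrete, here is a minimal sketch of the logic such a system might apply. It is an illustration under stated assumptions, not the NTSB's specification or any manufacturer's design: the `TakeoffCheck` structure and `takeoff_alerts` function are hypothetical names, and the runway the airplane actually occupies is assumed to be supplied by some position source matched against an airport database.

```python
from dataclasses import dataclass


@dataclass
class TakeoffCheck:
    """Inputs for a pre-takeoff sanity check (hypothetical structure)."""
    cleared_runway: str   # runway named in the takeoff clearance
    occupied_runway: str  # runway the airplane is actually on (assumed known)
    required_ft: int      # distance needed to reach rotation speed
    available_ft: int     # length of the occupied runway


def takeoff_alerts(check: TakeoffCheck) -> list[str]:
    """Return alert messages; an empty list means no discrepancy was found."""
    alerts = []
    if check.occupied_runway != check.cleared_runway:
        alerts.append(
            f"WRONG RUNWAY: on {check.occupied_runway}, "
            f"cleared for {check.cleared_runway}"
        )
    shortfall = check.required_ft - check.available_ft
    if shortfall > 0:
        alerts.append(f"RUNWAY TOO SHORT: {shortfall} ft short")
    return alerts


# Figures from the accident report as quoted in this article.
for message in takeoff_alerts(TakeoffCheck("22", "26", 3744, 3501)):
    print(message)
```

Fed the numbers from the Lexington accident, the sketch raises both alerts. Neither check depends on the crew noticing anything, which is the point of the recommendation.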

COMPLACENCY AT WORK

During all of the crew’s prior operations at Blue Grass Airport, the takeoff process was the same: Taxi from the ramp, turn right onto Taxiway A, then, at the end of the taxiway, which in the absence of taxiway construction would be the approach end of Runway 22, turn onto the runway and apply takeoff power. In fact, that’s exactly what they did, but with unplanned-for results. On this day, the “end” of the taxiway was the approach end of Runway 26, not Runway 22’s.

While busy with pre-takeoff activities, the flight crew certainly understood that they needed to taxi to the end of Taxiway A. When they encountered the low barrier and flashing lights, they may have simply assumed they had reached the end of the originally configured taxiway. Physical attributes took the place of specific indicators such as taxiway and runway signs. Prior experience at the airport told them that the end of the taxiway was the cue to turn left onto the runway and begin the takeoff. It apparently did not occur to the crew that the barrier and flashing lights meant that something important had changed and that they needed to reevaluate their situation. Operating by rote, the flight crew followed their customary process.

The literature is rife with examples demonstrating industry’s attempts to mitigate the effects of complacency. Zawodniak (2010) recommends practices such as online training modules to reinforce power plant theory, as well as cross-training between different functional areas. One writer noted that once complacency sets in, it is difficult to cure, but asserted that it could be prevented by the establishment of standards of performance and quality production (Naval Safety Center). Furst (2017) suggests that complacency will decrease “if an organization creates a culture that embraces enterprise-wide risk management.”

There is little in the literature, however, presenting substantive evidence that incorporating any of the suggested ideas improved an organization’s safety record. This bodes ill for environments such as the one that was found at Blue Grass Airport. If it is difficult to effectively address complacency in a relatively self-contained environment in which everyone belongs to the same organization and is thus under the control of one central authority, it will be even more problematic in an environment characterized by multiple actors, all under the guidance of different sets of leadership, with few formal opportunities for cooperative action. Like general aviation.

Blue Grass Airport took a more direct approach. The airport and its runways were reconfigured so that the conditions that existed in 2006 are unlikely to recur. Runway 26 was relocated and the taxiways were redesigned so that airliners are no longer required to cross the shorter runway on the way to the longer one. With the new runway layout, it now takes a deliberate effort to reach the shorter general aviation runway, and if crews of light aircraft mistakenly select the longer runway, no harm is done.

By redesigning the airport, its managers engineered the many effects of complacency out of the system. Instead of imploring pilots and controllers “to do better” (the unwritten conclusion of the NTSB report), the airport managers recognized that complacency is part of being human and that it is therefore always a danger.

The FAA’s Advisory Circular AC 91-73B, “Parts 91 and 135 Single Pilot, Flight School Procedures During Taxi Operations,” includes numerous recommendations. Those regarding situational awareness apply to all pilots:

* Refer to the current airport diagram and check the assigned taxi route against the diagram with the heading indicator or compass. Pay special attention to hot spots and complex intersections, and follow the aircraft’s progress on the airport diagram.

* Read back all hold-short instructions and do not cross any runway hold-short lines without a specific clearance.

* Pilots should perform all high-workload duties (e.g., panel configuration and programming) before beginning to taxi.

* Pilots should scan the full length of the runway and look for aircraft on final approach. If there is any confusion about the scan results, the pilot should ask ATC to clarify the situation.

* If disoriented, never stop on a runway. ATC should be contacted any time there is a concern about a potential conflict.

* Pilots should be especially vigilant when instructed to line up and wait, particularly at night or during periods of reduced visibility.

EXPERIENCE, COMPLACENCY

The Comair Flight 5191 accident illustrates what can result from experience and complacency. The confidence people obtain after repeatedly performing the same tasks can lead to the belief that the next time the actions needed and results obtained will be the same. The flight crew fell into that trap, which is not necessarily a criticism of the crew. Instead, it is a statement about people in general, and it holds a lesson for all of us. It may not be enough to simply tell people to be aware of the dangers of complacency; we need not only to be mindful of the dangers but also to design processes and mechanisms that assume that complacency will always be a factor. The reconfiguration of Blue Grass Airport, depicted in the airport diagram on page 13, is an example of designing the effects of complacency out of the system.

*Alba, Joseph and J. Wesley Hutchinson (2000), “Knowledge Calibration: What Consumers Know and What They Think They Know,” Journal of Consumer Research, Vol. 27, (September), 123-156.

*Buller, Lt. Tim (2000), “Complacency’s Evil Twin Brother,” Approach: The Naval Safety Center’s Aviation Magazine, Vol. 45, (November), 8-9.

*Furst, Peter G. (2017), “Managing Complacency in Construction,” IRMI, (April), retrieved from (https://www.irmi.com/articles/expert-commentary/managing-complacency-in-construction), February 18, 2019.

*Mehta, Benita (2017), “Complacency: The silent killer,” Industrial Safety & Hygiene News (June), retrieved from (https://www.ishn.com/articles/106752-complacency-the-silent-killer), February 1, 2019.

*Naval Safety Center (2008), “Complacency,” Approach: The Naval Safety Center’s Aviation Magazine, (May-June), 29.

*Page-Bottorff, Tim (2016), “The Habit of Safety – Forming, Changing & Reinforcing Key Behaviors,” Professional Safety, (February), 42-43.

*Wilson, Larry (2010), “Complacency The Silent Killer,” Occupational Health & Safety, Vol. 79, (September), 62-65.

*Wood, Stacy L. and John G. Lynch (2002), “Prior Knowledge and Complacency in New Product Learning,” Journal of Consumer Research, Vol. 29, (December), 416-426.

*Zawodniak, Rodger (2010), “Complacency,” Power Engineering, (August), 8.

Robert Checchio is an instrument-rated private pilot. He holds a PhD in Planning and Public Policy from Rutgers University.