For this magazine’s August 2021 issue, I wrote an article titled “Take A Minute,” in which I discussed some valuable advice about slowing things down and analyzing the situation around you. The initial inspiration came from a time when my captain and I were rushing to swap airplanes and, when we thought we were done, he sat us down and had us do nothing for a whole minute. Taken out of the hectic environment, both of us were able to collect our thoughts and break the mindset that leads to rushing and carelessness. The article also discussed in-flight scenarios where it was imperative to act immediately, distinguishing them from those where taking your time and working through all your options was more beneficial.

Well, wouldn’t you know: Soon I was sitting in recurrent training and we started discussing aeronautical decision-making (ADM) and crew resource management (CRM). A slide popped up about Naturalistic vs. Optimum Decision-Making. Part of me was bummed that my article did not have an even remotely original thought. Another, larger part was intrigued that what I discussed in that article had a fancy name and was heavily studied by psychologists. Even more interesting, it was not necessarily geared toward aviation.

HUMAN FACTORS

One thing that has always fascinated me about aviation is how it tends to bleed into other aspects of life. I have known pilots who do a quick walkaround of their car every morning or who have built flows and checklists into their day-to-day lives to help prevent forgetting or missing something important. Aviation’s deep dive into human factors and implementation of ADM, CRM and positive safety culture has saved countless lives. Other industries, such as the medical field, have used the same techniques to prevent errors and reduce complacency.

That is why I enjoy studying human factors so much, because the field is applicable to all aspects of life. An engine failure after takeoff can lead to the same decision-making process as the beginning of a car crash, or even something completely outside vehicular travel.

There was a study done on fear, and how humans react when faced with life-or-death situations. One discussion involved a woman who encountered a mountain lion while on a hike. After being initially frozen by fear, she was eventually able to find a defensible position and injure the mountain lion enough that it lost interest in her.

This example could be a case study on naturalistic decision-making, showing how our brains work under extreme pressure. Understanding how and why our brains work this way can help us navigate challenging scenarios and combat paralysis and extreme fear, and can have direct applications in the cockpit.

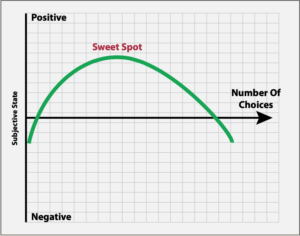

Analysis paralysis is defined as the inability to make a decision due to overthinking a problem. Extreme fear is a term coined by scientists attempting to study the drastic difference in performance between humans in life-or-death scenarios. Why does one person freeze like a deer in the headlights, while another takes immediate action? One theory that made sense to me is that freezing is an evolved response. If you are walking in the wild and stumble upon a predator, immediately freezing in your tracks can prevent the predator from discovering you and allow you to fully assess the situation.

Both phenomena contribute to startle factor, which can be described as an inappropriate response to an unexpected event. Part of the benefit of studying how the brain works is that you can recognize when there is a problem and combat it. If you recognize yourself waffling back and forth, force yourself to decide before the passage of time makes the decision for you.

NATURALISTIC DECISIONS

Naturalistic, or automatic, decision-making originated from the desire to study how people make complex decisions in real-world situations. Initial research stemmed from the U.S. Army and Navy, which makes sense when you consider the challenges of training for combat and other scenarios that require making quick choices with extremely high stakes. Research found a common factor in situations with undesirable outcomes was a form of tunnel vision. Decisions were made and committed to, without assessing all available information and exploring alternative options.

Obviously, emergencies and abnormalities have varying levels of time sensitivity. Proper reaction to an engine failure at 200 feet above the runway, for example, is as much instinct as it is actual decision-making. If the natural response is not to initially reduce the angle of attack, there may not be any additional decisions to make. If the aircraft remains under control, the pilot may only be able to run the Recognition-Primed Decision-Making Model once before impact. It would go something like this:

1. Option: Land straight ahead.

2. Good Enough? Yes.

3. Land straight ahead.

Even in this simplified model, additional options present themselves. Should you land straight ahead on the golf course or the pond slightly to the right? An example where tunnel vision can derail quality decision-making is the impossible turn. Almost every flight has the end goal of landing on a runway. This decision is often forced, even if it is not viable:

1. Option: Return to the runway.

2. Good Enough? No.

3. Loss-of-control accident.
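For readers who think in code, the two run-throughs above can be sketched as a simple "first option that is good enough" loop. This is only an illustration under stated assumptions: the function name `recognition_primed_choice`, the option strings and the `viable` set are all hypothetical, and a real pilot's "good enough" check rests on experience and training, not a lookup.

```python
from typing import Callable, Iterable, Optional


def recognition_primed_choice(
    options: Iterable[str],
    good_enough: Callable[[str], bool],
) -> Optional[str]:
    """Return the first option that passes the 'good enough' check.

    Options are considered one at a time, in the order they come to mind.
    There is no ranking and no search for the best option.
    """
    for option in options:
        if good_enough(option):
            return option  # commit and execute
    return None  # nothing acceptable was recognized


# Hypothetical scenario: engine failure shortly after takeoff.
# Assumption for illustration only: returning to the runway is not viable.
options = ["return to the runway", "land straight ahead", "land on the golf course"]
viable = {"land straight ahead", "land on the golf course"}

choice = recognition_primed_choice(options, lambda option: option in viable)
print(choice)
```

The point of the sketch is the honest "No" in step 2. Tunnel vision is what happens when the first option is executed without ever running the check.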

REQUIRED ACTIONS

There are two important factors to consider in naturalistic decision-making. First and foremost, you need to have some experience with the options available. The easiest method for determining if an option is viable is having exercised it before, or at least been through something similar enough, perhaps in training, to draw conclusions about success rates.

The second factor is education, which fills in the gaps where experience would be too hazardous to justify or would just take too long to be practical. Continuing the above example, we discuss engine failures after takeoff in the classroom because most of us prefer to not intentionally tinker with our engines at low altitudes.

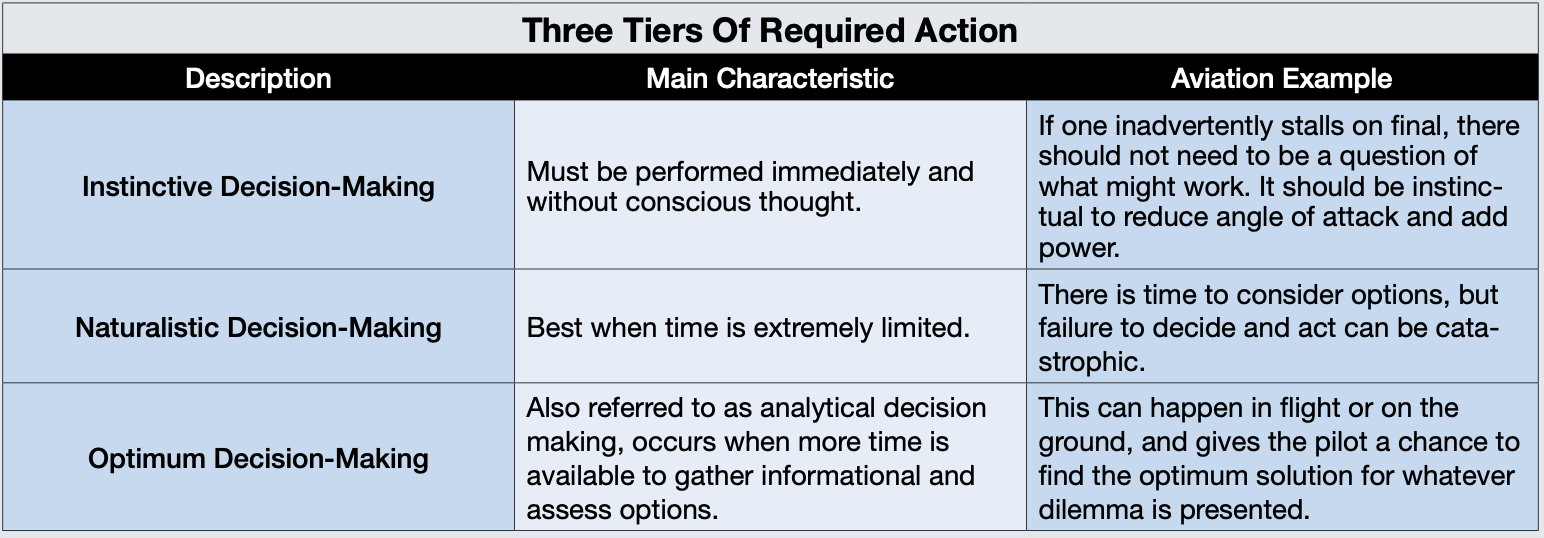

Typically, when we consider these models, there is no time to complete a full risk assessment and consider every option from every angle. A few expressions come to mind: “Do not let the perfect get in the way of the good” and “An okay decision made now is better than a perfect decision made too late” are examples. Notice how the models do not state a perfect or ideal outcome. Flying is a complex thing. One must gather all information available, make a decision and implement it. I would propose there are three tiers of required action, and every situation falls somewhere on the spectrum. The table above details these three tiers of required action: Instinctive, Naturalistic and Optimum.

OPTIMUM DECISION-MAKING

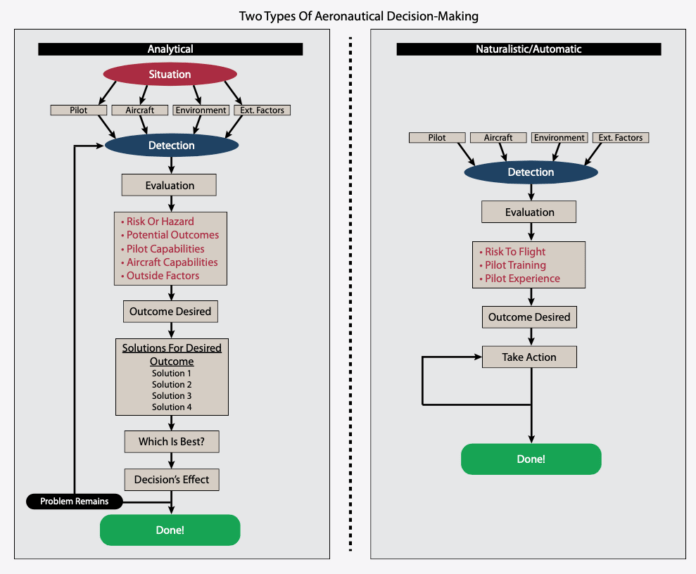

Like every good CFI, I memorized and taught most of the common ADM acronyms. PAVE, DECIDE, the 3 Ps, the 5 Ps, CARE, TEAM. The acronyms are handy, and it helps to explore several different pathways from potential risk to a safe outcome. The first thing to point out, though, is the length and complexity of the analytical decision-making tree, reproduced on page 4. For the most part, the two steps that are the most involved are evaluations and solutions.

Given more time, most of us would like to gather as much data as possible and come up with several solutions. In optimum decision-making, we can strive for the best answer. It reminds me of the SATs and other multiple-choice tests, including FAA knowledge exams, where there can be more than one right answer but there is always one that is the “most correct.”

With both models, the first step is always soliciting information. With the naturalistic model, typically the problem presents itself in the cockpit as a mechanical anomaly or something like rapidly degrading weather. Outside of the cockpit and those time-pressing issues, we can gather information more comfortably.

Weather briefings, reviewing Notams and preflighting are all examples of this. The best part is if an issue or roadblock pops up, you have options aplenty. Something invaluable that hopefully everyone develops over their flying career is a network of mentors, friends, and instructors to call and ask for advice. Options might be more limited in flight, but ATC, FSS and even EFBs give pilots more information than we can possibly need.

Once all pertinent (or available) information is gathered, it is time to come up with solutions. Experience and education are still invaluable during this step, but the pressures of making a time-sensitive decision are not present. Instead of just finding a solution that works, risk assessments can be conducted.

We can extend the above example of an engine failure to fit into optimum decision-making. Consider an engine failure at, say, 8000 feet agl. There are some items that need to be completed immediately, but once the aircraft is configured and on-speed there are some decisions to be made. Ideally, a turn to the nearest suitable airport would be first.

Of course, even this decision can be multifaceted. Assume the airplane can glide 15 miles, and there is one airport five miles away and another one 10 miles away. Which do you choose? What if the airport 10 miles away has a much longer runway, and emergency services available? What if you have to glide into a headwind to get there, while the less-well-equipped-airport option comes with a tailwind? Both choices will probably work, but which most increases the chances of a favorable outcome?
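One way to put rough numbers on the headwind question is a back-of-the-envelope sketch like the one below. It assumes the wind lies directly along the track, that the 15-mile figure is a still-air glide range, and it uses a made-up glide speed of 100 knots and a made-up 20-knot wind; the function name is hypothetical. Because time aloft in the glide is the same either way, the ground distance simply scales with groundspeed over airspeed. The sketch ignores turns, pattern altitude and everything else that eats into a real glide.

```python
def wind_adjusted_glide_range(still_air_range, glide_speed_kt, headwind_kt):
    """Ground distance covered in a glide with wind along the track.

    headwind_kt is positive for a headwind and negative for a tailwind.
    The result is in the same distance units as still_air_range.
    """
    groundspeed_kt = glide_speed_kt - headwind_kt
    return still_air_range * groundspeed_kt / glide_speed_kt


# Assumed values for illustration only (not from any aircraft manual).
STILL_AIR_RANGE = 15   # miles, from the example above
GLIDE_SPEED_KT = 100   # hypothetical best-glide speed
WIND_KT = 20           # hypothetical wind along the track

# Far airport: 10 miles away, into the headwind.
far_range = wind_adjusted_glide_range(STILL_AIR_RANGE, GLIDE_SPEED_KT, WIND_KT)
far_margin = far_range - 10

# Near airport: 5 miles away, with the tailwind.
near_range = wind_adjusted_glide_range(STILL_AIR_RANGE, GLIDE_SPEED_KT, -WIND_KT)
near_margin = near_range - 5

print(f"Far airport:  range {far_range:.1f} miles, margin {far_margin:.1f} miles")
print(f"Near airport: range {near_range:.1f} miles, margin {near_margin:.1f} miles")
```

With these assumed numbers, both airports remain within reach, but the margin to the better-equipped field shrinks to about two miles while the margin to the closer one grows to about 13. Whether a longer runway and emergency services are worth that thinner margin is exactly the kind of trade-off optimum decision-making leaves time to weigh.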

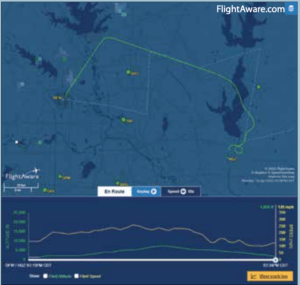

Optimum decision-making has pitfalls, especially if one is weighing the importance of other factors over safety of flight. The April 23, 2020, mishap involving N477SS, a PC-12/47 that crashed short of the Mesquite (Texas) Metro Airport (HQZ) after experiencing loss of engine power, seems to be a textbook example.

Initially, the solo pilot elected to divert to the Rockwall (Texas) Airport (F46) when they first experienced engine difficulties. Apparently, the engine stabilized, so the pilot elected to return to DFW. Rather than pick the closest suitable airport, the pilot ostensibly chose the airport with the most facilities. Pilots often put pressure on themselves to return their aircraft to base, or at least somewhere it can get fixed. Who wants to strand a broken plane somewhere without any maintenance? In the end, the plane lost engine power again, and ended up in a muddy field short of a third airport. As of this writing, the NTSB has not yet determined this accident’s probable cause.

When using optimum decision-making, it is important to not let the extra time to consider options undermine a good decision. The pilot initially made the best decision, which was to land at the nearest suitable airport. Even if engine power came back, it is never a safe bet to continue, especially for fear of inconvenience. I have seen too many pilots nurse a sick airplane back to base, because that is where the car is parked. Choose the safest path. Worry about the logistics on the ground.

Do not let tempting options about maintenance or facilities trick you into thinking that perfectly serviceable runway below you is not the best option. Once a safe decision is made, stick with it and focus on execution.

CHOICES, CHOICES

So why study this? Ultimately, what makes us pilots is not our superhuman ability to slam yokes around and jockey power. When we study accident case reports, it is rare that poor pilot technique is solely to blame. It can certainly contribute, especially if poor pilot technique is used to overcome poor decision-making. A base-to-final stall/spin accident, for example, is poor technique but ultimately the root cause is bad pattern planning and a decision to force the issue. Since it is not prudent to experience every dicey situation, we read case studies and accident reports.

We (hopefully) thoroughly debrief any abnormal experiences we have. Follow the flowcharts on successful and failed outcomes. Where did the failures occur? When did a successful outcome happen despite all odds? Understanding the reason for success is an excellent first step toward achieving it ourselves.

Ryan Motte is a Massachusetts-based Part 135 pilot, flight instructor and check airman. He moonlights as Director of Safety when he isn’t flying.